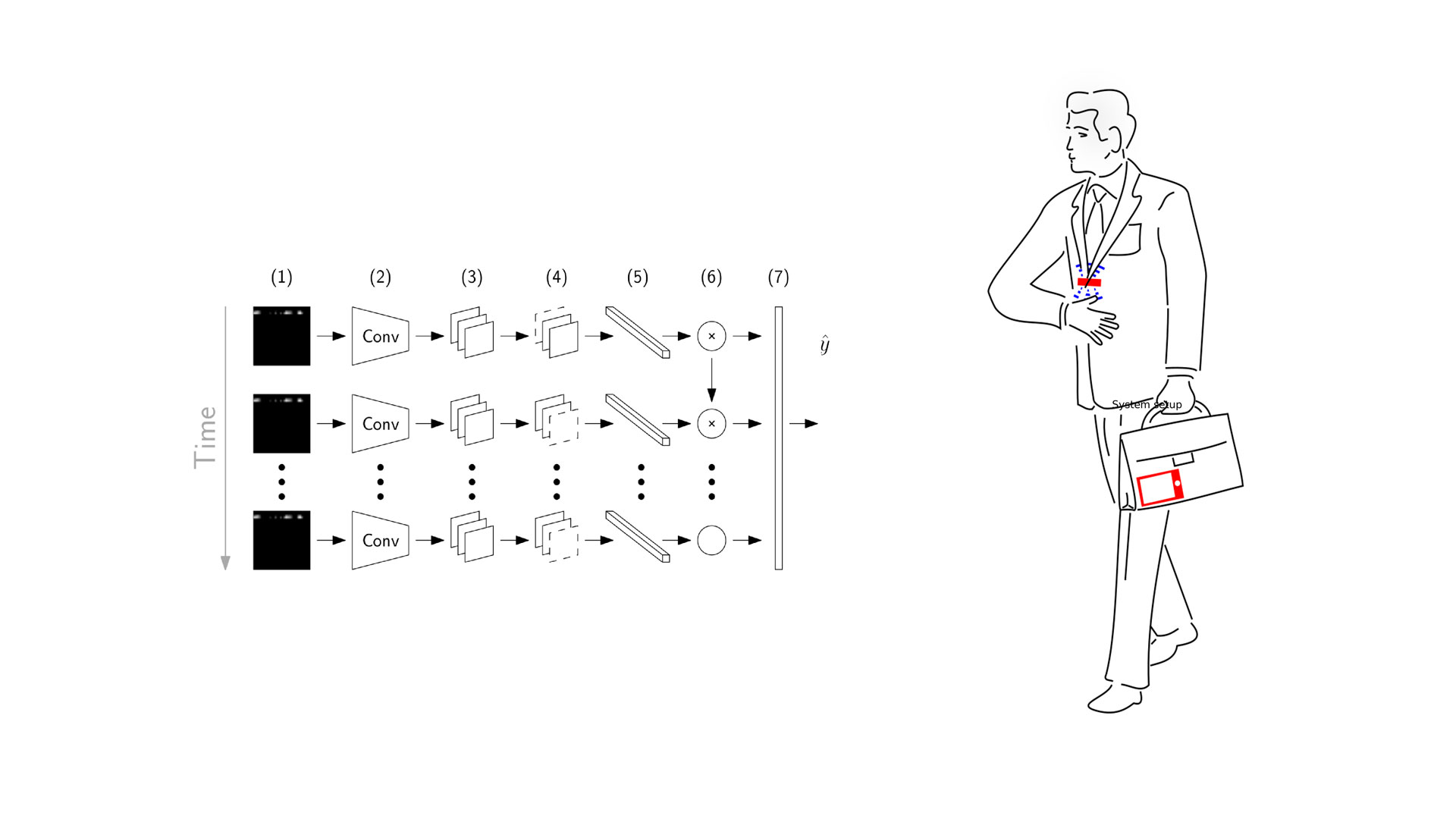

Using radar-on-chip sensors such as Google Soli, together with machine learning algorithms, we explore the world of microgestures. The goal is to build systems that can: (i) sense hand and finger gestures to enable inconspicuous, precise, and flexible object-oriented interactions with everyday objects; (ii) keep sensing even when the sensor is occluded by materials (e.g. a phone in a pocket or a leather bag); and (iii) perform sensing with low energy consumption.

Besides the publications (see the list below), all outputs of the project (datasets, models, software, and other tools) are available under the GPL license in the GitLab repository: https://gitlab.com/hicuplab/mi-soli.

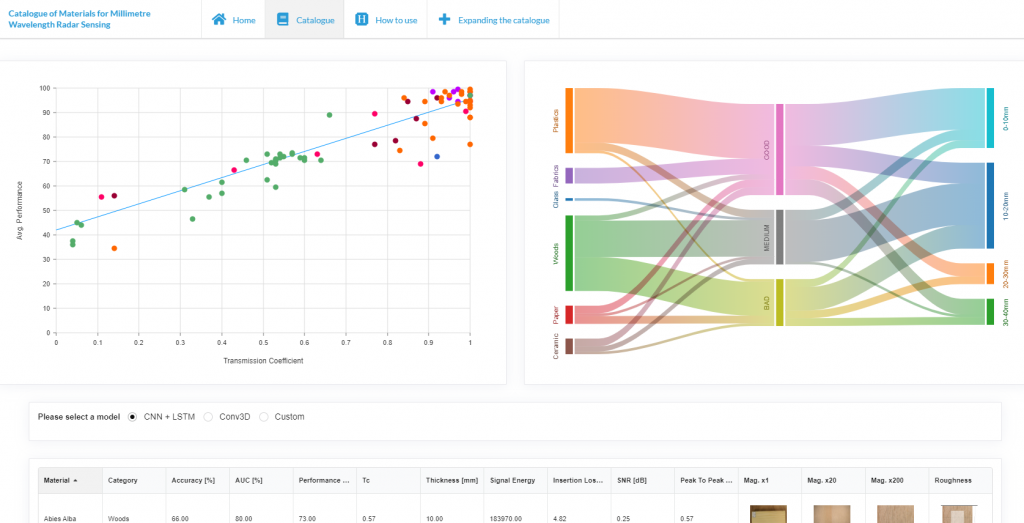

We also built a web app that offers an interactive catalogue of materials to help application designers select suitable materials for interaction: https://solidsonsoli.famnit.upr.si/catalogue

Publications:

Čopič Pucihar, K., Sandor, C., Kljun, M., Huerst, W., Plopski, A., Taketomi, T., … & Leiva, L. A. (2019, May). The missing interface: micro-gestures on augmented objects. CHI 2019, https://doi.org/10.1145/3290607.3312986

Leiva, L. A., Kljun, M., Sandor, C., & Čopič Pucihar, K. (2020, Oct). The Wearable Radar: Sensing Gestures Through Fabrics. MobileHCI 2020, https://doi.org/10.1145/3406324.3410720

Attygalle, N. T., Leiva, L. A., Kljun, M., Sandor, C., Plopski, A., Kato, H., & Čopič Pucihar, K. (2021). No Interface, No Problem: Gesture Recognition on Physical Objects Using Radar Sensing. Sensors, 21, 5771. https://doi.org/10.3390/s21175771 GitLab: https://gitlab.com/hicuplab/mi-soli

Čopič Pucihar, K., Attygalle, N. T., Kljun, M., Sandor, C., & Leiva, L. A. (2022). Solids on soli: Millimetre-wave radar sensing through materials. Proceedings of the ACM on Human-Computer Interaction, 6(EICS), 1-19. https://doi.org/10.1145/3532212 PDF: https://orbilu.uni.lu/bitstream/10993/50488/1/soli-radar-materials.pdf